Would you trust a machine to translate for you at a business meeting, or on a date? Would you let a machine read your thoughts to ‘talk’ without words? These and other likely near-future scenarios are explored in a series of animations produced by LITHME. The videos illustrate transformative technologies that will soon reshape the way we use language. Viewers are invited to participate in LITHME’s international survey to share their views, and to see what others around the world are saying.

Augmented and Virtual Reality are improving fast and are being embedded into smart glasses and other wearable devices. Technology will soon move from handheld mobile devices into our eyes and ears. Such immersive technologies will increasingly mediate the language we see, hear, and produce. In the longer term, developments in brain-machine interfaces will enable interaction without ‘speaking’ at all. Are we ready for this? As language is often thought of as something that defines us as humans, would we think differently about ourselves and the languages we use?

On the back of a 2021 public report showing the likely evolution of new language technologies, LITHME has now released four short animations illustrating some of these future scenarios. These are designed to get people thinking about what life will be like when such transformative technologies are widespread.

Together with the animations, LITHME has launched an online survey to gauge public views on these topics. Each animation has a section in the survey, and viewers can complete as many as they want. The animations and survey are available at https://lithme.eu/animations.

‘The aim of the videos and the accompanying survey is to challenge the public to think more actively and critically about technology-driven changes that lie ahead. We hope the viewers can personally relate to these visual stories that reflect the potential societal influence of language technologies currently being prototyped,’ comments the science communication coordinator of LITHME, Kais Allkivi-Metsoja (Tallinn University, Estonia). ‘The survey serves to inform future research and will hopefully help us facilitate a closer dialogue between linguists and technology developers,’ she adds.

Chair of LITHME Dave Sayers (University of Jyväskylä, Finland) notes that LITHME is ‘here to predict but not to cheerlead’. ‘We’re interested in the positives but also the ways some people will be left behind – for example deaf people, communities speaking smaller languages, and the many people who simply couldn’t afford these new gadgets,’ he explains. LITHME involves a wide range of tech developers, linguists, and social scientists. ‘Together we’re aiming for a balanced view of the human-machine era, to raise awareness of both the pluses and minuses, and try to get those critical conversations happening early,’ Sayers adds.

LITHME Vice-Chair Sviatlana Höhn (University of Luxembourg) also notes: ‘New technologies open up new ways to influence populations, but we do not know how and at what scale this will happen. So we must be prepared, and policy makers need to foresee and prevent potential damages. We can support them with the insights we are developing.’

The videos have been made in collaboration with the creative agencies Kala Ruudus (Estonia) and The Loupe (Luxembourg). A number of researchers from LITHME participated in the content development of the animations.

The animations as well as the survey cover the following topics.

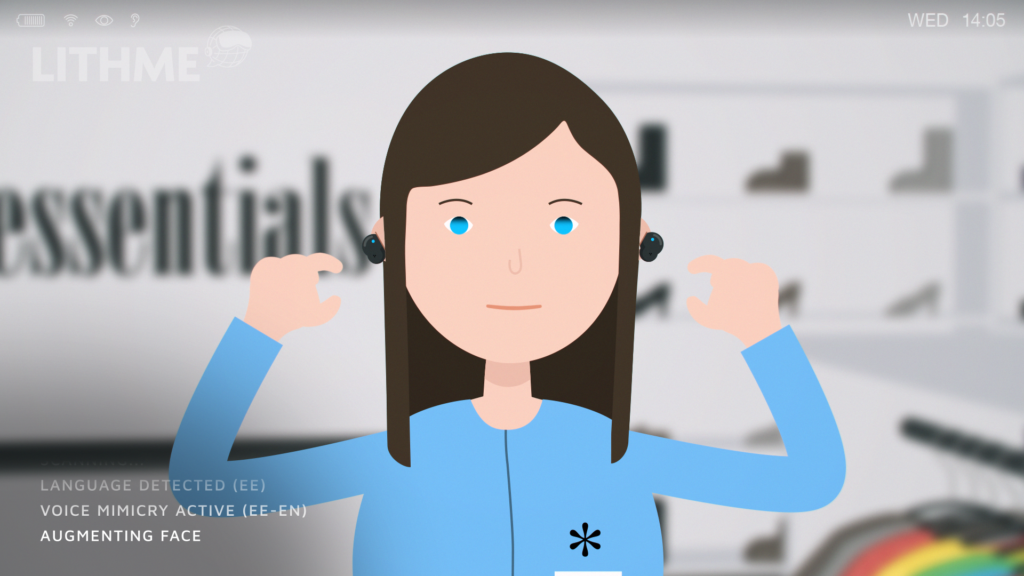

Augmented conversation and automated translation

Improved Augmented Reality glasses of the future could seamlessly alter the voices around you while giving you additional information about your surroundings. Such a device would translate the speech of people speaking other languages in real time and change the visual appearance of their mouth movements to match the translation.

Foreign language learning in Virtual Reality

If language learning happened in Virtual Reality, it would be possible to interact with intelligent virtual characters equipped with improved machine translation and speech technology. These characters could teach you and talk to you in simulated ‘normal’ settings. They would never get bored or impatient, and would happily repeat questions and phrases at your pace.

Accessibility and equality in new language technologies

This video shows some possible inequalities with future language technologies. At first these technologies will probably work better in languages with a lot of digital resources (e.g., English, Spanish) than in other, ‘smaller’ languages (e.g., Basque, Yoruba), and will be completely unavailable in languages with even fewer resources. For many more years, the tech will also be much less effective for sign languages.

Mind-reading technology, and talking without speaking

What if you didn’t have to speak or move at all to communicate? Brain-machine interfaces may be the hardest to see coming, but prototypes that translate brain signals into words already exist. As the tech develops, this could work with fairly small sensors attached to the head, or hidden sensors elsewhere. If we can communicate with each other, and with machines, without saying a word, would we continue using language at all?